Microsoft AI Research Division Accidentally Exposed 38TB of Confidential Data

Beginning in July 2020, Microsoft AI researchers unintentionally exposed 38TB of private data through a public GitHub repository.

Wiz, Cybersecurity Company

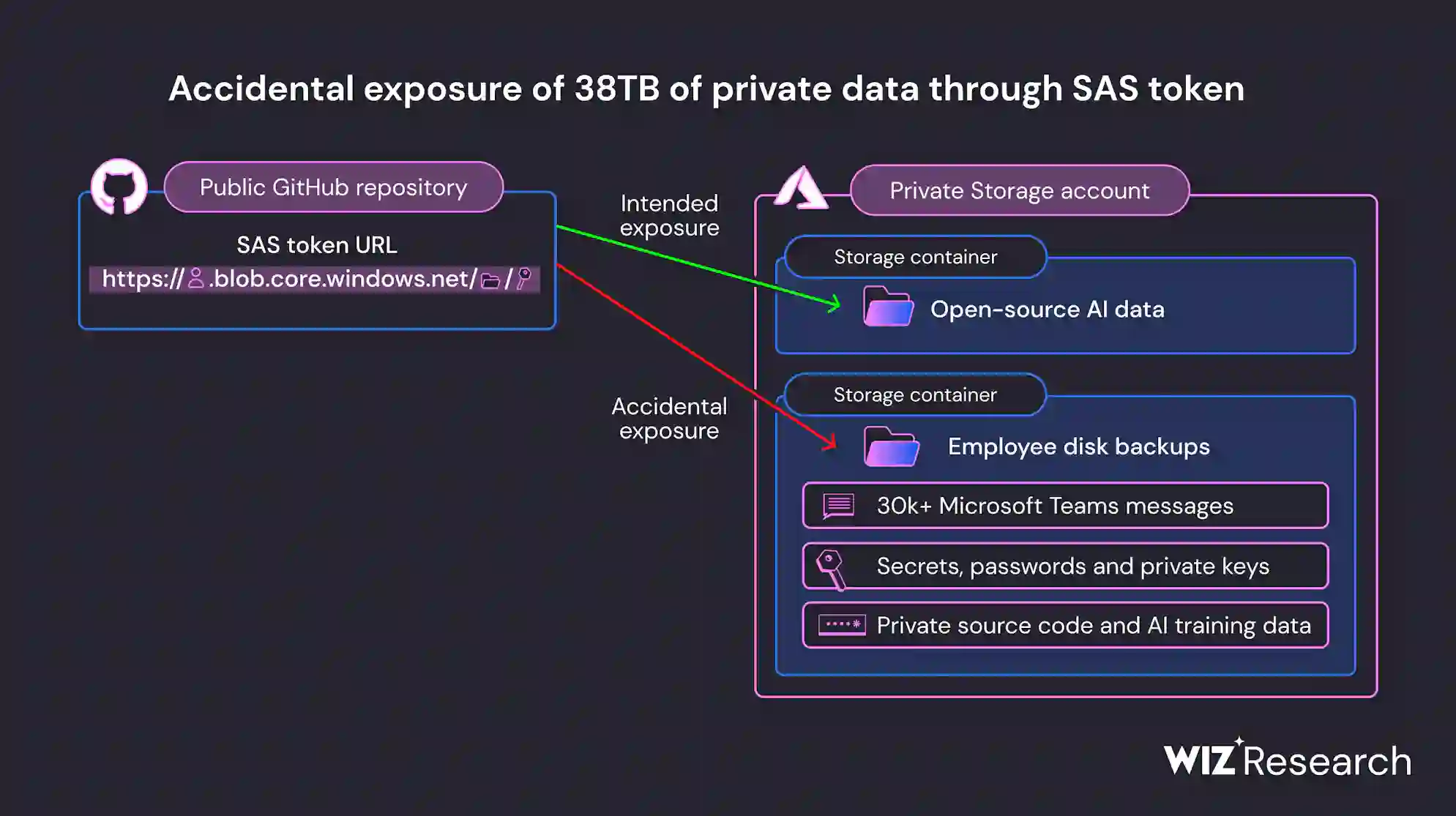

| When publishing a crate of open-source training data on GitHub, the Microsoft AI research group unintentionally exposed 38TB of critical information.

“The researchers used a SAS tokens feature in Azure, which lets you share data from Azure Storage accounts, to distribute their files. The link in this instance was set up to share the whole storage account, which included an additional 38TB of private information, even though the access level could have been restricted to just a few select files.” |

The exposed data included a disk backup of two employees’ workstations containing confidential information, private keys, passwords, and more than 30,000 internal Microsoft Teams messages.

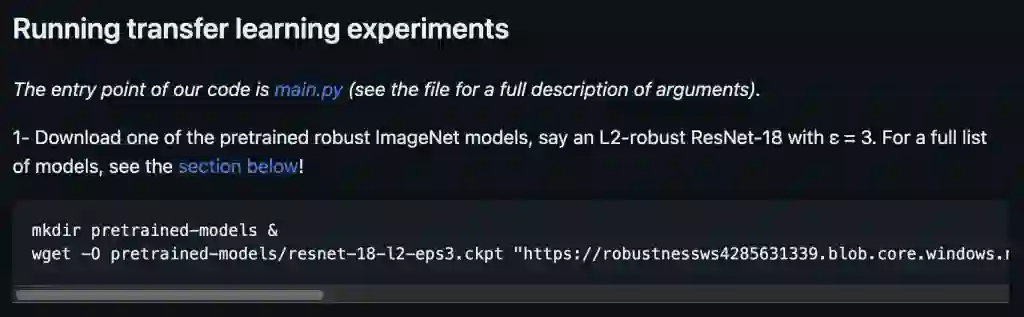

The Wiz Research Team came across the repository while scanning the Internet for misconfigured storage containers that expose cloud-hosted data. The researchers found a GitHub repository named robust-models-transfer under the Microsoft organization.

The repository belonged to Microsoft’s AI research team, which used it to publish open-source code and AI models for image recognition. The team began sharing data through it in July 2020.

Data held in Azure Storage accounts used by Microsoft’s research team was shared via Azure SAS tokens.

Private information was exposed because the Azure Storage signed URL published in the repository was inadvertently configured to grant access to the entire storage account rather than to the intended files.
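The difference between a narrowly scoped link and one that covers the whole storage account is visible in the SAS URL’s query string. The Python sketch below is a hypothetical helper, not Microsoft or Wiz tooling. It assumes the commonly documented Azure SAS query parameters (`ss` and `srt` mark an account SAS, `sr` marks a service SAS, `sp` holds the permissions, `se` the expiry), and the account name and URLs used with it are made up.

```python
from urllib.parse import urlparse, parse_qs


def describe_sas_scope(url: str) -> dict:
    """Summarize the scope of a SAS token from its URL query string.

    Assumption: an account SAS carries the signed-services (ss) and
    signed-resource-types (srt) parameters, while a service SAS for
    Blob storage carries a signed-resource (sr) parameter instead.
    """
    parsed = urlparse(url)
    # parse_qs returns lists of values; keep the first value per key.
    params = {key: values[0] for key, values in parse_qs(parsed.query).items()}

    if "ss" in params or "srt" in params:
        kind = "account"   # not limited to one container or blob
    elif "sr" in params:
        kind = "service"   # limited to a single resource such as a blob
    else:
        kind = "unknown"   # no recognizable SAS scope parameters

    return {
        "host": parsed.hostname,
        "kind": kind,
        "services": params.get("ss", ""),
        # srt for an account SAS, sr for a service SAS
        "resource": params.get("srt", params.get("sr", "")),
        "permissions": params.get("sp", ""),
        "expiry": params.get("se", ""),
    }
```

For a hypothetical URL such as `https://exampleaccount.blob.core.windows.net/models/?sv=2020-08-04&ss=b&srt=sco&sp=rl&se=2051-10-06T00:00:00Z&sig=REDACTED`, the helper reports the kind as `"account"`, the kind of token Wiz describes, whereas a link scoped to a single blob would carry `sr=b` and be reported as `"service"`.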

Wiz explained the problem as follows:

“But access to more than just open-source models was possible with this URL. It was set up to grant permissions across the entire storage account, unintentionally exposing more private information.

“Sharing an AI dataset prompted a significant data leak that exposed over 38TB of sensitive personal information. The use of Account SAS tokens as the sharing mechanism was the primary culprit. SAS tokens are a security risk because of a lack of oversight and accountability. Hence, their use should be minimized.”
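Wiz singles out Account SAS tokens as the main culprit. As a rough illustration of what a pre-publication check might flag, the Python sketch below inspects a SAS query string and warns about account-level scope and about permissions beyond reading and listing. The permission-letter table is an assumption covering only a common subset; the full set of letters varies by storage service and API version, and the function name is hypothetical.

```python
from urllib.parse import parse_qs

# Assumed subset of SAS permission letters; the real set is larger and
# differs between storage services and API versions.
PERMISSION_NAMES = {
    "r": "read", "a": "add", "c": "create",
    "w": "write", "d": "delete", "l": "list",
}
# Letters this sketch treats as read-only.
READ_ONLY = {"r", "l"}


def audit_sas_query(query: str) -> list[str]:
    """Return human-readable warnings for a SAS token's query string."""
    params = {key: values[0] for key, values in parse_qs(query).items()}
    warnings = []

    # Account SAS tokens carry ss/srt and are not tied to one container or blob.
    if "ss" in params or "srt" in params:
        warnings.append("account SAS: scope is the whole storage account")

    # Collect every granted permission that goes beyond read or list.
    extra = [
        PERMISSION_NAMES.get(letter, f"unknown ({letter})")
        for letter in params.get("sp", "")
        if letter not in READ_ONLY
    ]
    if extra:
        warnings.append("non-read permissions: " + ", ".join(extra))

    return warnings
```

A read-only token scoped to a single blob produces no warnings, while an account SAS with `sp=racwdl` triggers both.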

According to Wiz, SAS tokens are difficult to track because Microsoft does not provide a centralized way to manage them in the Azure portal.
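Without a central inventory of issued tokens, one partial safeguard is to scan files for SAS URLs before they are pushed to a public repository. The Python sketch below works under stated assumptions: it only recognizes URLs on `*.core.windows.net` storage endpoints that carry a `sig=` parameter, so tokens behind custom domains, or URLs split across lines, would be missed. The pattern and function name are illustrative, not part of any Azure tool.

```python
import re

# Assumed shape of a SAS URL: an Azure Storage endpoint followed by a
# query string that contains a sig= (signature) parameter.
SAS_URL = re.compile(
    r"https://[a-z0-9]+\.(?:blob|file|queue|table|dfs)\.core\.windows\.net"
    r"/[^\s\"'<>?]*\?(?:[^\s\"'<>]*&)?sig=[^\s\"'<>)]+",
    re.IGNORECASE,
)


def find_sas_urls(text: str) -> list[tuple[int, str]]:
    """Return (line_number, url) pairs for every SAS-looking URL in text."""
    hits = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for match in SAS_URL.finditer(line):
            hits.append((lineno, match.group(0)))
    return hits
```

Run over a README or notebook before a commit, a non-empty result would be a prompt to review the link by hand rather than proof of a leak.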

Microsoft stated that the data leak did not expose any customer information:

“No client information was disclosed, and this problem did not endanger any internal services. Customers do not need to take any action in response to this problem.”

The timeline of this security incident is provided below:

- July 20, 2020 – First SAS token committed to GitHub; set to expire on October 5, 2021.

- October 6, 2021 – SAS token expiry date updated to October 6, 2051.

- June 22, 2023 – Wiz Research discovers the issue and reports it to MSRC.

- June 24, 2023 – Microsoft invalidates the SAS token.

- July 7, 2023 – SAS token replaced on GitHub.

- August 16, 2023 – Microsoft completes its internal investigation of the potential impact.

- September 18, 2023 – Public disclosure.
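The timeline shows how an expiry date can quietly stretch a token’s life: the original token was valid for a little over a year, while the updated one was set to last about 30 years. The Python sketch below measures those validity windows and checks them against a maximum lifetime. The 365-day limit and the function names are hypothetical, and the GitHub commit date of July 20, 2020 is used as a stand-in for the original token’s issue date.

```python
from datetime import date

# Hypothetical policy limit, chosen only for illustration.
MAX_LIFETIME_DAYS = 365


def lifetime_days(start: date, expiry: date) -> int:
    """Days between the start of a token's validity and its expiry."""
    return (expiry - start).days


def exceeds_policy(start: date, expiry: date, max_days: int = MAX_LIFETIME_DAYS) -> bool:
    """True if the token stays valid longer than the allowed maximum."""
    return lifetime_days(start, expiry) > max_days


# Commit date stands in for the issue date of the original token.
original = lifetime_days(date(2020, 7, 20), date(2021, 10, 5))  # 442 days
# The expiry was pushed out to 2051 on October 6, 2021.
extended = lifetime_days(date(2021, 10, 6), date(2051, 10, 6))  # 10957 days

if __name__ == "__main__":
    print(original, exceeds_policy(date(2020, 7, 20), date(2021, 10, 5)))
    print(extended, exceeds_policy(date(2021, 10, 6), date(2051, 10, 6)))
```

Under this hypothetical one-year policy, both windows would be flagged, the extended one by a factor of roughly 30.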

About the Author

Suraj Koli is a content specialist with expertise in cybersecurity and B2B domains. He has written for the News4Hackers Blog and Craw Security, as well as for sectors including business, law, food and beverage, and entertainment. He began his career in product sales, where he learned to understand products from the customer’s point of view, experience that has served him well as a writer. He is currently a regular writer at Craw Security.
