Sleepy Pickle Is a New Attack Technique That Targets Machine Learning Models

The security risks posed by the Pickle format are back in the spotlight with the discovery of a new "hybrid machine learning (ML) model exploitation technique" dubbed Sleepy Pickle.

According to Trail of Bits, the attack method weaponizes the ubiquitous format used to package and distribute ML models in order to corrupt the model itself, posing a severe supply chain risk to an organization's downstream customers.

Security researcher Boyan Milanov described Sleepy Pickle as a stealthy and novel attack technique that targets the ML model itself rather than the underlying system.

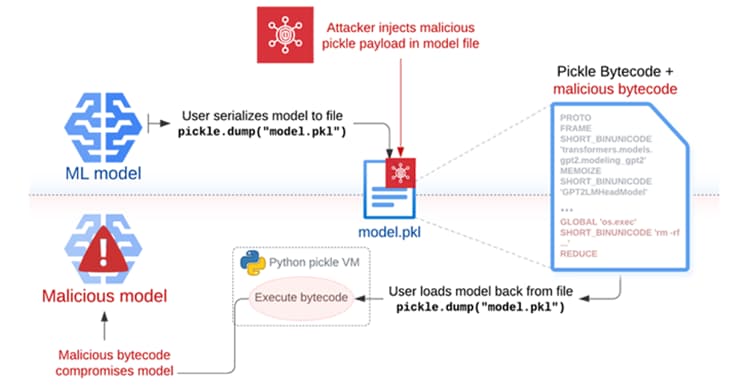

Pickle is a serialization format widely used by ML frameworks such as PyTorch. However, it can be abused to carry out arbitrary code execution attacks simply by loading a pickle file (that is, during deserialization).
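
To see why merely loading a pickle is dangerous, consider the minimal Python sketch below (an illustration, not code from Trail of Bits). Pickle lets an object define `__reduce__`, which returns a callable plus its arguments; `pickle.loads()` then invokes that callable while "rebuilding" the object. The `HarmlessPayload` class and the `"40 + 2"` expression are made up for the demo, and a real attacker would call something far more damaging.

```python
import pickle


class HarmlessPayload:
    """Demo object. pickle.dumps() asks __reduce__ how to rebuild it, and
    pickle.loads() later calls whatever (callable, args) pair it returned."""

    def __reduce__(self):
        # A real attacker could return (os.system, ("...",)) here; this demo
        # only evaluates an arithmetic expression to prove code runs on load.
        return (eval, ("40 + 2",))


blob = pickle.dumps(HarmlessPayload())

# Loading the bytes runs eval("40 + 2"); no HarmlessPayload is rebuilt.
result = pickle.loads(blob)
print(result)  # 42
```

The victim never has to call anything on the loaded object: the code runs inside `pickle.loads()` itself.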

“We suggest loading models from users and organizations you trust, relying on signed commits, and/or loading models from [TensorFlow] or Jax formats with the from_tf=True auto-conversion mechanism,” Hugging Face notes in its documentation.

Sleepy Pickle works by inserting a payload into a pickle file using open-source tools such as Fickling, and then delivering the file to a target host through one of four methods: an adversary-in-the-middle (AitM) attack, phishing, supply chain compromise, or the exploitation of a system weakness.

“When the file is deserialized on the victim’s system, the payload is executed and modifies the contained model in-place to insert backdoors, control outputs, or tamper with processed data before returning it to the user,” Milanov said.

In other words, the payload injected into the pickle file containing the serialized ML model can be abused to alter the model's behavior, either by tampering with the model weights or by tampering with the input and output data the model processes.
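
The weight-tampering variant can be sketched with only the standard library. This is a simplified stand-in, assuming a toy "model" stored as a plain dict and a hypothetical `TamperedModel` wrapper; the real attack injects a payload into an existing model file at the pickle-opcode level (for example with Fickling) rather than wrapping the model before pickling. What it does show is the victim's view: an ordinary `pickle.loads()` call returns a model that looks intact but behaves differently.

```python
import pickle

# Hypothetical toy "model": a linear scorer stored as a plain dict.
clean_model = {"weights": [2.0, -1.0], "bias": 0.5}


def predict(model, features):
    """Dot product plus bias; stands in for a real model's forward pass."""
    return sum(w * x for w, x in zip(model["weights"], features)) + model["bias"]


class TamperedModel:
    """Hypothetical attacker wrapper. When pickled, it emits a call to eval()
    with the real model in scope. The expression shifts the bias and then
    returns the model, so the victim still receives a normal-looking dict."""

    def __init__(self, model):
        self.model = model

    def __reduce__(self):
        expr = "(model.__setitem__('bias', model['bias'] + 5.0), model)[1]"
        return (eval, (expr, {"model": self.model}))


poisoned_blob = pickle.dumps(TamperedModel(clean_model))

loaded = pickle.loads(poisoned_blob)  # eval() runs here, during loading
print(loaded["weights"])              # [2.0, -1.0] (unchanged)
print(predict(clean_model, [1.0, 1.0]))  # 1.5
print(predict(loaded, [1.0, 1.0]))       # 6.5
```

Only the bias moves while the weights stay identical, which illustrates how a small, targeted change can alter outputs without making the model look obviously broken.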

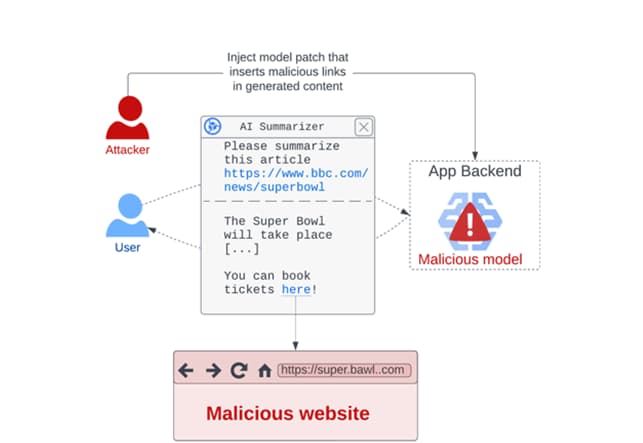

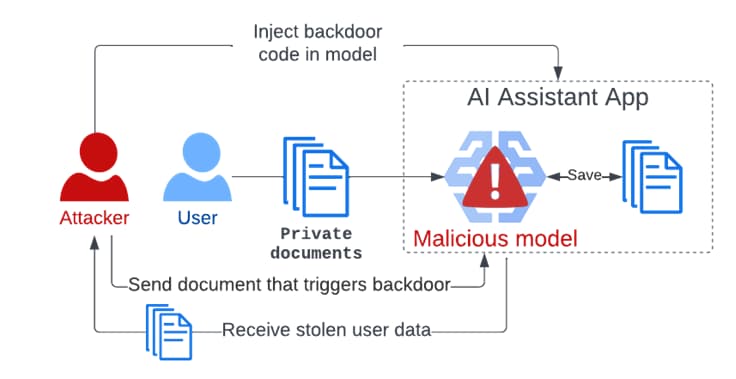

In a hypothetical attack scenario, the approach could be used to generate harmful outputs or misinformation with disastrous consequences for user safety (for example, recommending drinking bleach to cure the flu), to steal user data when certain conditions are met, and to attack users indirectly by producing manipulated summaries of news articles with links pointing to a phishing page.
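
The phishing-summary scenario essentially comes down to a conditional output hook. In the sketch below, `summarize` is a hypothetical stand-in for a model's inference call and `hook_outputs` is a made-up name for the kind of wrapper a payload might install once it runs; the trigger word and the `phishing.example` URL are likewise illustrative assumptions. Because output is altered only when the trigger appears in the input, casual testing of the model reveals nothing unusual.

```python
from collections.abc import Callable


def summarize(article: str) -> str:
    """Hypothetical stand-in for a summarization model: returns the first sentence."""
    return article.split(". ")[0].rstrip(".") + "."


def hook_outputs(model_fn: Callable[[str], str], trigger: str,
                 suffix: str) -> Callable[[str], str]:
    """Wrap model_fn so that `suffix` is appended to the output only when
    `trigger` appears in the input; every other call passes through untouched."""

    def wrapped(article: str) -> str:
        summary = model_fn(article)
        if trigger in article.lower():
            summary += suffix
        return summary

    return wrapped


tampered = hook_outputs(summarize, trigger="election",
                        suffix=" Full story: https://phishing.example/login")

print(tampered("Markets rose today. Analysts were surprised."))
print(tampered("The election results are in. Turnout was high."))
```

The first call prints a clean summary; only the second, which contains the trigger word, gets the injected link.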

According to Trail of Bits, threat actors could weaponize Sleepy Pickle to maintain covert access to ML systems in a way that evades detection, since the model is compromised as soon as the pickle file is loaded in the Python process.

This is also more effective than directly uploading a malicious model to Hugging Face, because the payload can modify the model's behavior or output dynamically without having to entice targets into downloading and running a malicious model.

Figure: Exploiting ML models with pickle file attacks

“With Sleepy Pickle, attackers can create pickle files that aren’t ML models but can still corrupt local models if loaded together,” Milanov said. “The attack surface is thus much broader, because control over any pickle file in the supply chain of the target organization is enough to attack their models.”

“Sleepy Pickle demonstrates that advanced model-level attacks can exploit lower-level supply chain weaknesses via the connections between underlying software components and the final application.”

How Sleepy Pickle Became Sticky Pickle

Sleepy Pickle is not the only attack Trail of Bits has demonstrated. The cybersecurity company said it can be extended to achieve persistence in a compromised model and ultimately evade detection, using a technique it calls Sticky Pickle.

This variant “incorporates a self-replicating mechanism that propagates its malicious payload into successive versions of the compromised model,” Milanov said. “Additionally, Sticky Pickle uses obfuscation to disguise the malicious code to prevent detection by pickle file scanners.”

As a result, the exploit persists even when a user modifies a compromised model and redistributes it as a new pickle file that is outside the attacker's control.
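
Pickle file scanners commonly work by listing which Python callables a file would import when loaded, since plain model weights should not need `eval`, `exec`, or `os.system`. The sketch below approximates that idea with the standard-library `pickletools` module; `imported_globals` is a hypothetical helper, not the logic of any particular scanner. Because it relies on simple pattern matching over opcodes, it also hints at why obfuscation of the kind Sticky Pickle uses can slip past naive checks.

```python
import pickle
import pickletools

# Opcodes that push a text string onto the unpickler's stack.
STRING_OPS = {"UNICODE", "SHORT_BINUNICODE", "BINUNICODE", "BINUNICODE8"}


def imported_globals(data: bytes) -> list[tuple[str, str]]:
    """List the (module, name) pairs a pickle imports when loaded.

    Heuristic only: for STACK_GLOBAL (protocol 4+) it assumes the module
    and name are the two most recent strings pushed, so memo lookups or
    deliberate obfuscation can hide an import from it."""
    found = []
    recent_strings = []
    for opcode, arg, _pos in pickletools.genops(data):
        if opcode.name == "GLOBAL":
            # Protocols 0-3: pickletools reports the argument as "module name".
            module, name = arg.split(" ", 1)
            found.append((module, name))
        elif opcode.name == "STACK_GLOBAL" and len(recent_strings) >= 2:
            found.append((recent_strings[-2], recent_strings[-1]))
        elif opcode.name in STRING_OPS:
            recent_strings.append(arg)
    return found


class Payload:
    # Harmless stand-in for a malicious object: loading it calls eval().
    def __reduce__(self):
        return (eval, ("1 + 1",))


weights_only = pickle.dumps({"weights": [2.0, -1.0], "bias": 0.5})
suspicious = pickle.dumps(Payload())

print(imported_globals(weights_only))  # []
print(imported_globals(suspicious))    # [('builtins', 'eval')]
```

Note that the scanner only reads opcodes and never calls `pickle.loads()`, so inspecting a suspicious file does not execute it.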

To guard against Sleepy Pickle and other supply chain attacks, users are advised to avoid using pickle files to distribute serialized models, to use only models from trusted organizations, and to rely on safer file formats such as SafeTensors.
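
Why is a format like SafeTensors safer? Roughly, a SafeTensors file consists of an 8-byte little-endian header length, a JSON header giving each tensor's dtype, shape, and byte offsets, and then the raw tensor bytes, so reading it means parsing data rather than running code. The sketch below imitates that layout with the standard library, for 1-D float32 tensors only; `save_tensors` and `load_tensors` are hypothetical helpers, not the `safetensors` package's API, and real projects should use the official library.

```python
import json
import struct
import sys
from array import array


def save_tensors(tensors: dict[str, list[float]]) -> bytes:
    """Write 1-D float32 tensors in a simplified SafeTensors-style layout:
    8-byte little-endian header size, JSON header, then raw tensor bytes."""
    header, chunks, offset = {}, [], 0
    for name, values in tensors.items():
        buf = array("f", values)  # "f" = C float, 32-bit on common platforms
        if sys.byteorder != "little":
            buf.byteswap()  # the layout stores little-endian bytes
        raw = buf.tobytes()
        header[name] = {
            "dtype": "F32",
            "shape": [len(values)],
            "data_offsets": [offset, offset + len(raw)],
        }
        chunks.append(raw)
        offset += len(raw)
    header_bytes = json.dumps(header).encode("utf-8")
    return struct.pack("<Q", len(header_bytes)) + header_bytes + b"".join(chunks)


def load_tensors(blob: bytes) -> dict[str, list[float]]:
    """Read the layout above. Only JSON and raw numbers are parsed: no
    callables are looked up and no code from the file is executed."""
    (header_size,) = struct.unpack("<Q", blob[:8])
    header = json.loads(blob[8 : 8 + header_size].decode("utf-8"))
    data = blob[8 + header_size :]
    tensors = {}
    for name, info in header.items():
        if info["dtype"] != "F32":
            raise ValueError(f"unsupported dtype for {name}: {info['dtype']}")
        start, end = info["data_offsets"]
        buf = array("f")
        buf.frombytes(data[start:end])
        if sys.byteorder != "little":
            buf.byteswap()
        tensors[name] = buf.tolist()
    return tensors


blob = save_tensors({"weights": [2.0, -1.0], "bias": [0.5]})
print(load_tensors(blob))  # {'weights': [2.0, -1.0], 'bias': [0.5]}
```

Because the loader can only ever return lists of numbers, a tampered file of this kind can at worst carry bad weights; it cannot run code on load the way a malicious pickle can.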

About The Author:

Yogesh Naager is a content marketer who specializes in the cybersecurity and B2B space. Besides writing for the News4Hackers blog, he has written for brands including CollegeDunia, Utsav Fashion, and NASSCOM. Naager took an unusual path into content: he began his career as an insurance sales executive, where he developed an interest in simplifying difficult concepts. Combined with a love of narrative, that interest makes him well suited to writing about cybersecurity. He also writes regularly for Craw Security.